publications

Main author only, in reverse chronological order.

2025

-

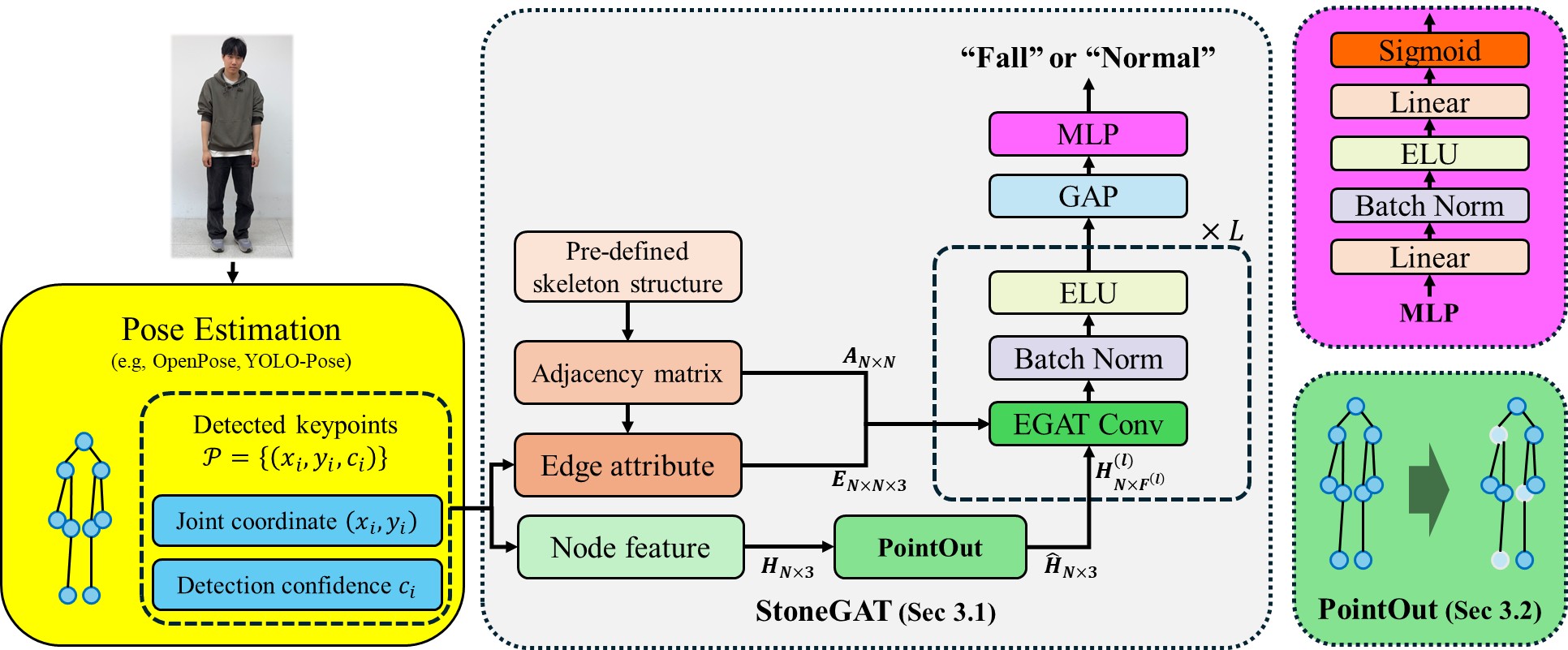

StoneGAT: A Robust Fall Detection Framework via Skeleton-aware Graph Attention Networks. Soeun Chun, Seokjun Song, Doyeop Lee, and Sangyun Lee. International Journal of Control, Automation, and Systems, Dec 2025.

Robust fall detection is critical in safety-sensitive contexts such as elderly care and industrial environments. Recent fall detection methods leverage 2D human pose estimation, which encodes posture as compact skeletal keypoints. However, these systems are vulnerable to performance degradation when body parts are occluded or keypoints are missing, often due to environmental constraints or inaccuracies in pose estimation. To address this, we propose a fall detection framework based on single-frame 2D human pose estimation and a skeleton-aware graph attention network (StoneGAT). StoneGAT enhances the conventional graph attention network (GAT) by incorporating edge features based on bone-related information, such as bone lengths, joint angles, and confidence-based metrics. In addition, we introduce a training strategy named PointOut, which probabilistically drops node features during training to encourage structure-aware learning, thereby improving model robustness. Experiments on a combined dataset of AI-hub and in-house data demonstrate that StoneGAT with PointOut outperforms baseline models, including a standard MLP, a graph convolutional network (GCN), and GAT, especially under severe occlusion. Ablation studies confirm the effectiveness of edge attributes and the PointOut strategy.
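As a rough illustration of what probabilistically dropping node features can look like, the sketch below zeroes out whole keypoint feature vectors at random. It is not the paper's implementation: the function name `point_out`, the per-node independent drop, the zero-fill masking, and the `[x, y, confidence]` feature layout are all assumptions made for this example.

```python
import random


def point_out(node_features, drop_prob, rng=None):
    """Return a copy of node_features with randomly chosen nodes zeroed.

    node_features: list of per-keypoint feature lists (assumed [x, y, confidence]).
    drop_prob: probability of dropping each node independently (assumed scheme).
    """
    if rng is None:
        rng = random.Random()
    result = []
    for feat in node_features:
        if rng.random() < drop_prob:
            # Dropped node: every feature of this keypoint is set to zero.
            result.append([0.0] * len(feat))
        else:
            # Kept node: features are copied unchanged.
            result.append(list(feat))
    return result


# Three hypothetical keypoints; a fixed seed makes the run repeatable.
keypoints = [[0.5, 0.2, 0.9], [0.4, 0.6, 0.8], [0.7, 0.9, 0.3]]
augmented = point_out(keypoints, drop_prob=0.5, rng=random.Random(0))
```

In a training loop, a function like this would be applied to each sample before it is passed to the graph network, and skipped at inference time.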

@article{stonegat_ijcas,
  author = {Chun, Soeun and Song, Seokjun and Lee, Doyeop and Lee, Sangyun},
  title = {StoneGAT: A Robust Fall Detection Framework via Skeleton-aware Graph Attention Networks},
  journal = {International Journal of Control, Automation, and Systems},
  year = {2025},
  volume = {23},
  number = {12},
  pages = {3702--3713},
  month = dec,
  issn = {1598-6446, 2005-4092},
  doi = {https://doi.org/10.1007/s12555-025-0546-z},
  url = {https://ijcas.org/journal/view.html?doi=10.1007/s12555-025-0546-z},
  publisher = {The International Journal of Control, Automation, and Systems},
  language = {eng},
  keywords = {Edge attribute, fall detection, graph attention network, pose estimation, posture recognition},
}

-

StoneGAT: Skeleton-aware Graph Attention Networks for Robust Fall Detection from Single-frame Poses. Soeun Chun, Seokjun Song, Doyeop Lee, and Sangyun Lee. In 2025 25th International Conference on Control, Automation and Systems (ICCAS), Nov 2025.

-

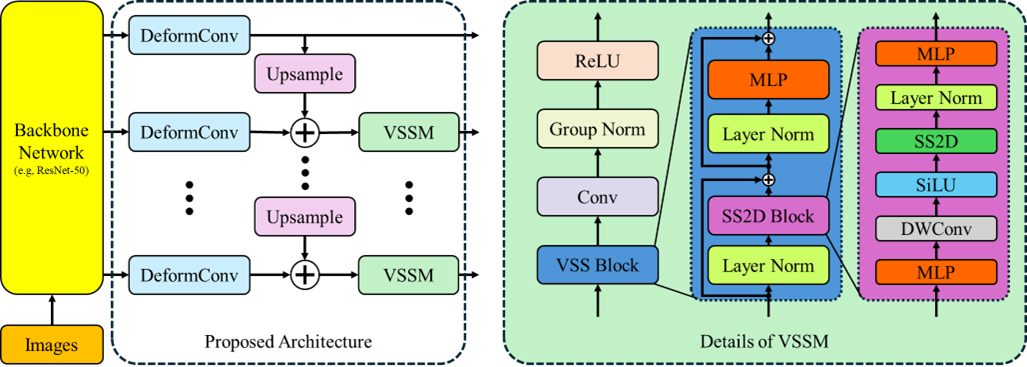

Feature Refinement With Vision State Space Modules for Tiny Object Detection. Sangyun Lee. Journal of Institute of Control, Robotics and Systems, Jul 2025.

Tiny object detection (TOD) plays a vital role in robotics and control systems, particularly in applications such as drone-based surveillance, autonomous navigation, and intelligent monitoring. Detecting extremely small objects in aerial imagery poses significant challenges due to limited spatial detail and insufficient contextual information. While feature pyramid networks (FPNs) have proven effective in handling multi-scale features, conventional FPN-based approaches often suffer from reduced spatial precision and semantic richness, factors critical for reliable tiny object detection. To address these limitations, we propose an enhanced FPN architecture that integrates deformable convolution (DeformConv) and vision state space modules (VSSMs). DeformConv replaces standard fixed-kernel convolutions in the lateral connections, enabling adaptive focus on sparse spatial locations to better capture geometric variations and fine-grained spatial features. In parallel, VSSMs are incorporated into the top-down pathway to model long-range contextual dependencies, thereby mitigating the semantic information loss typically encountered in traditional FPNs. Experiments conducted on the VisDrone2019 and AI-TOD v2 datasets demonstrate that the proposed architecture consistently outperforms conventional FPN-based detectors, validating the robustness and effectiveness of our feature refinement strategy for tiny object detection.

@article{vssmfpn,
  title = {Feature Refinement With Vision State Space Modules for Tiny Object Detection},
  journal = {Journal of Institute of Control, Robotics and Systems},
  volume = {31},
  number = {7},
  pages = {797-801},
  year = {2025},
  month = jul,
  issn = {1976-5622},
  doi = {https://doi.org/10.5302/J.ICROS.2025.25.0119},
  author = {Lee, Sangyun},
  keywords = {tiny object detection, feature pyramid network, vision state space model, mamba},
}

-

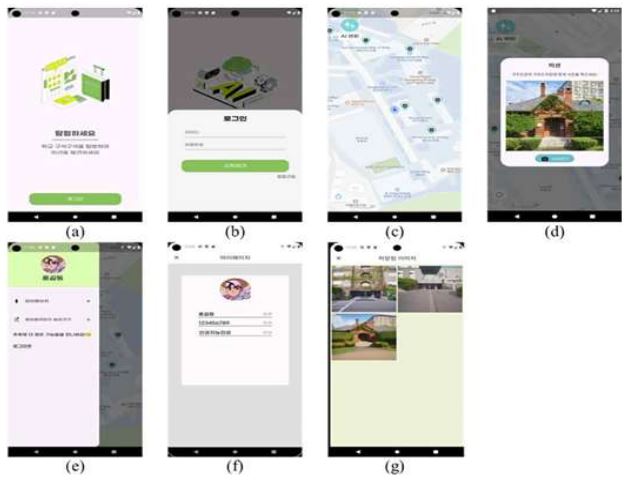

DeepCampus: A Campus Tour Mobile Application Using Deep Learning-Based Vision Technologies. Mujae Park, Yun A Kim, Heeju Cha, and Sangyun Lee. The Transactions of the Korean Institute of Electrical Engineers, Jan 2025.

This study proposes a campus tour mobile application called DeepCampus that leverages deep learning-based vision technologies to enhance user engagement and interaction. The proposed application introduces two core features: a photo mission, where users take photos at designated campus locations, and a commemorative photo creation function that transforms these photos into character-based styles. The photo mission relies on a CNN-based place recognition model, trained on a custom-built campus dataset, to verify mission completion. The commemorative photo creation function uses a diffusion model to generate personalized, stylized photos, providing users with unique records of their campus experience. By integrating place recognition and generative models, the application encourages active exploration. The effectiveness of the proposed system is validated through experiments on the custom dataset, demonstrating its technical reliability and potential to enhance campus tours.

@article{deepcampus,
  title = {DeepCampus: A Campus Tour Mobile Application Using Deep Learning-Based Vision Technologies},
  journal = {The Transactions of the Korean Institute of Electrical Engineers},
  volume = {74},
  number = {1},
  pages = {127-134},
  month = jan,
  year = {2025},
  issn = {1975-8359},
  doi = {https://doi.org/10.5370/KIEE.2025.74.1.127},
  author = {Park, Mujae and Kim, Yun A and Cha, Heeju and Lee, Sangyun},
  keywords = {Campus tour, Mobile application, Place recognition, Generative models, Commemorative photo},
}

-

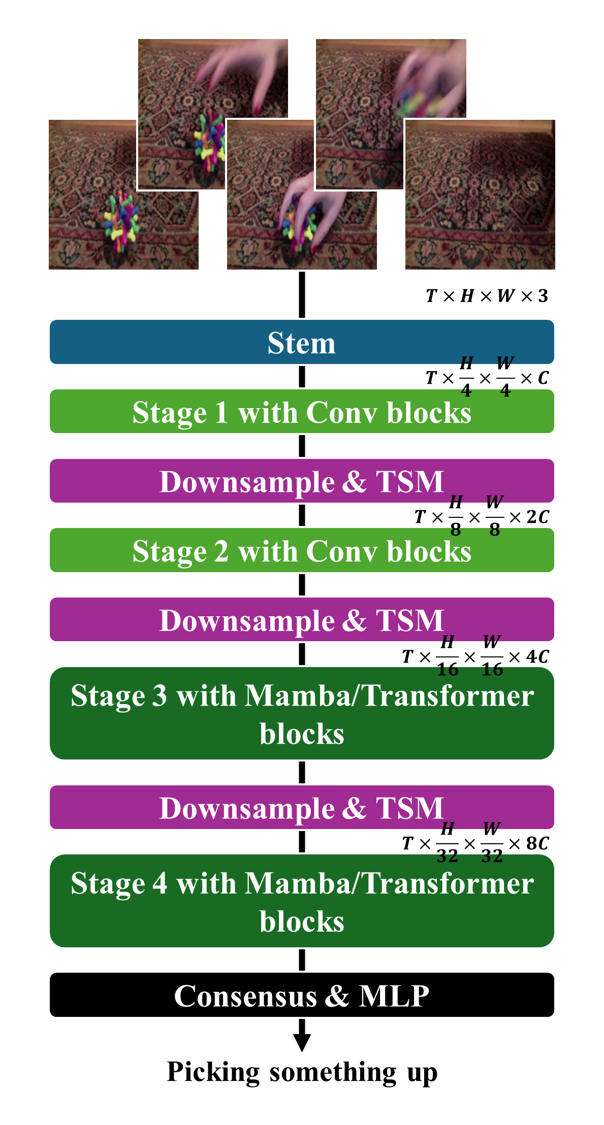

Making Mamba Vision Temporal: Leveraging TSM for Efficient Video Understanding. Seung Woo Kwak, Sungjun Hong, and Sangyun Lee. In 2025 International Conference on Electronics, Information, and Communication (ICEIC), Jan 2025.

Transformer and Mamba-based models have shown promising results in the field of video understanding. However, their excessive complexity often leads to computational overhead and memory explosion. To address this issue, we propose a novel model called Mamba VisionTSM, which integrates the MambaVision architecture with the Temporal Shift Module (TSM). TSM has been effectively used to enable temporal modeling in 2D CNN models. In this work, we extend its application to the hybrid Mamba Vision model, allowing it to efficiently process temporal information while retaining the long-range spatial dependency capabilities of Mamba Vision. Through experimental results on the Something-Something V2 dataset, we demonstrate that our approach achieves performance comparable or superior to that of existing Transformer-based approaches in action recognition, highlighting its potential for broader video understanding tasks.

@inproceedings{mambavisiontsm,
  author = {Kwak, Seung Woo and Hong, Sungjun and Lee, Sangyun},
  booktitle = {2025 International Conference on Electronics, Information, and Communication (ICEIC)},
  title = {Making Mamba Vision Temporal: Leveraging TSM for Efficient Video Understanding},
  year = {2025},
  volume = {},
  number = {},
  pages = {1-3},
  keywords = {Computational modeling;Computer architecture;Transformers;Explosions;Computational efficiency;Complexity theory;Convolutional neural networks;Optimization;MambaVision;Temporal Shift Module (TSM);Video Understanding;Action Recognition;Hybrid Models},
  doi = {https://doi.org/10.1109/ICEIC64972.2025.10879687},
  issn = {2767-7699},
  month = jan,
}

2024

-

Making TSM better: Preserving foundational philosophy for efficient action recognition. Seok Ryu, Sungjun Hong, and Sangyun Lee. ICT Express, Jan 2024.

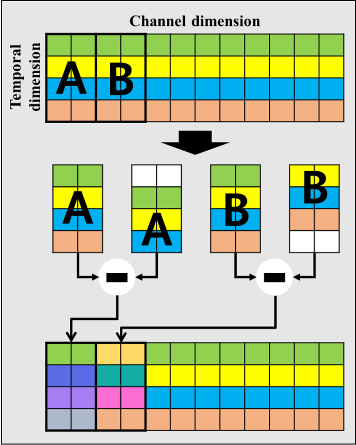

In this study, we present the Discriminative Temporal Shift Module (D-TSM), an enhancement of the Temporal Shift Module (TSM) for action recognition. TSM has limitations in capturing intricate temporal dynamics due to its simplistic feature shifting. D-TSM addresses this by introducing a subtraction operation before the shifting. This enables the extraction of discriminative features between adjacent frames, thereby allowing for effective action recognition where subtle motions serve as crucial cues. It preserves TSM’s foundational philosophy, prioritizing minimal computational overhead and no additional parameters. Our experiments demonstrate that D-TSM significantly improves the performance of TSM and outperforms other leading 2D CNN-based methods.
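The idea of subtracting adjacent-frame features and carrying the result in the shifted channels can be sketched as follows. This is only one possible reading of the abstract, not the published D-TSM formulation: the function name `shift_with_difference`, the `fold_div` parameter, the choice of which channels carry forward versus backward differences, and the zero padding at the clip boundaries are all assumptions made for illustration. Features are plain Python lists (time by channel) to keep the example dependency-free.

```python
def shift_with_difference(frames, fold_div=8):
    """Replace a fraction of channels with adjacent-frame differences.

    frames: list of T frames, each a list of C channel values.
    fold_div: 1/fold_div of the channels go to each temporal direction (assumed).
    No learnable parameters are involved, only subtraction and indexing.
    """
    num_frames = len(frames)
    num_channels = len(frames[0])
    fold = num_channels // fold_div
    out = [list(frame) for frame in frames]  # untouched channels are copied as-is
    for t in range(num_frames):
        for c in range(fold):
            # Channels [0, fold): next frame minus current frame, zero at the last frame.
            out[t][c] = frames[t + 1][c] - frames[t][c] if t + 1 < num_frames else 0.0
        for c in range(fold, 2 * fold):
            # Channels [fold, 2*fold): current frame minus previous frame, zero at the first frame.
            out[t][c] = frames[t][c] - frames[t - 1][c] if t > 0 else 0.0
    return out


# Toy clip: 3 frames, 8 channels, values chosen so differences are easy to read.
clip = [[float(t * 10 + c) for c in range(8)] for t in range(3)]
shifted = shift_with_difference(clip)
```

With 8 channels and `fold_div=8`, only channels 0 and 1 are replaced by differences; the remaining six channels pass through unchanged.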

@article{RYU2024570,
  title = {Making TSM better: Preserving foundational philosophy for efficient action recognition},
  journal = {ICT Express},
  volume = {10},
  number = {3},
  pages = {570-575},
  year = {2024},
  issn = {2405-9595},
  doi = {https://doi.org/10.1016/j.icte.2023.12.004},
  url = {https://www.sciencedirect.com/science/article/pii/S2405959523001625},
  author = {Ryu, Seok and Hong, Sungjun and Lee, Sangyun},
  keywords = {Action recognition, Gesture recognition, Temporal modeling, Temporal shift module},
}

2023

-

D-TSM: Discriminative Temporal Shift Module for Action Recognition. Sangyun Lee and Sungjun Hong. In 2023 20th International Conference on Ubiquitous Robots (UR), Jun 2023.

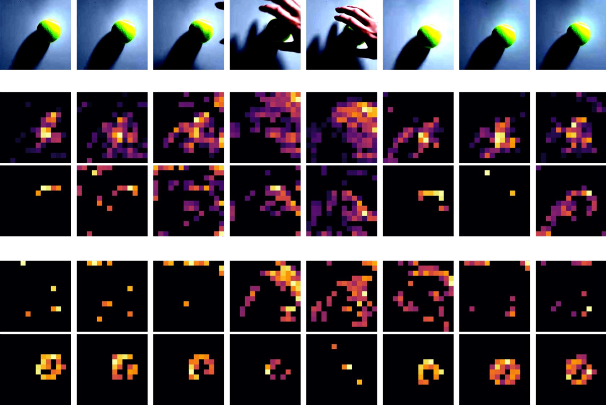

Action recognition is one of the representative perception tasks for robot applications, but it remains challenging due to complex temporal dynamics. Although the temporal shift module (TSM) has been considered one of the best 2D CNN-based architectures for temporal modeling, its inherent structural simplicity limits performance and leaves room for improvement. To mitigate this issue while following TSM’s philosophy, this paper presents a variant of TSM, termed Discriminative TSM (D-TSM), with a focus on capturing discriminative features of motion patterns. Going beyond the naive shift operation in TSM, our D-TSM explicitly transforms shifted features by applying element-wise subtraction. This simple approach effectively creates discriminative features between adjacent frames with a small extra computational cost and zero additional parameters. The experiments on the Something-Something and Jester datasets demonstrate that our D-TSM outperforms TSM and achieves competitive performance with low FLOPs against other methods.

@inproceedings{10202338,
  author = {Lee, Sangyun and Hong, Sungjun},
  booktitle = {2023 20th International Conference on Ubiquitous Robots (UR)},
  title = {D-TSM: Discriminative Temporal Shift Module for Action Recognition},
  year = {2023},
  volume = {},
  number = {},
  pages = {133-136},
  keywords = {},
  doi = {10.1109/UR57808.2023.10202338},
  issn = {},
  month = jun,
}

2022

-

Extended Siamese Convolutional Neural Networks for Discriminative Feature Learning. Sangyun Lee and Sungjun Hong. International Journal of Fuzzy Logic and Intelligent Systems, 2022.

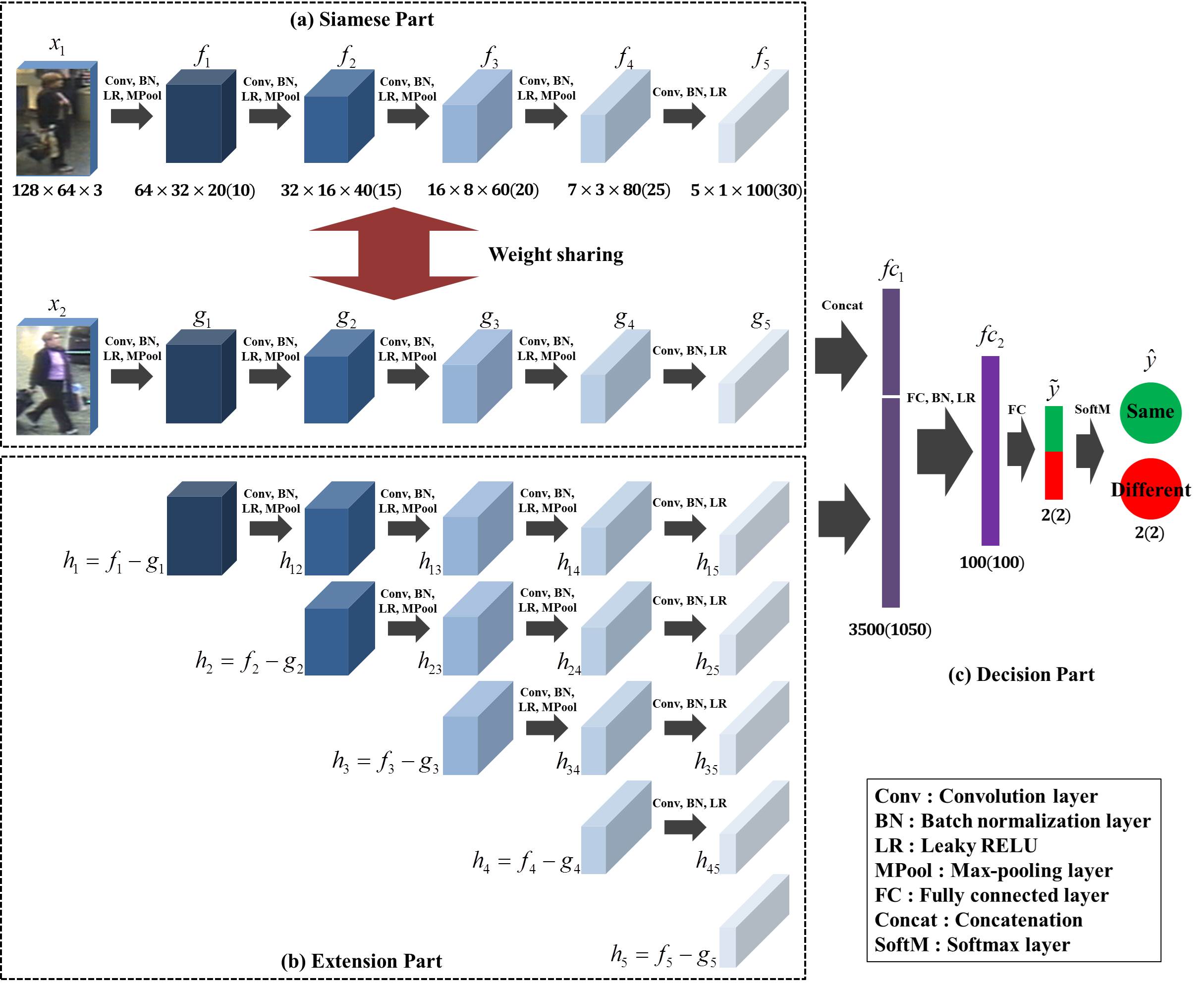

Siamese convolutional neural networks (SCNNs) have been considered among the best deep learning architectures for visual object verification. However, these models have the drawback that each branch extracts features independently without considering the other branch, which sometimes leads to unsatisfactory performance. In this study, we propose a new architecture called an extended SCNN (ESCNN) that addresses this limitation by learning both independent and relative features for a pair of images. ESCNNs also have a feature augmentation architecture that exploits the multi-level features of the underlying SCNN. The results of feature visualization showed that the proposed ESCNN can encode relative and discriminative information for the two input images at multi-level scales. Finally, we applied an ESCNN model to a person verification problem, and the experimental results indicate that the ESCNN achieved an accuracy of 97.7%, which outperformed an SCNN model with 91.4% accuracy. The results of ablation studies also showed that a small version of the ESCNN performed 5.6% better than an SCNN model.

@article{lee2022extended,
  title = {Extended Siamese Convolutional Neural Networks for Discriminative Feature Learning},
  author = {Lee, Sangyun and Hong, Sungjun},
  journal = {International Journal of Fuzzy Logic and Intelligent Systems},
  volume = {22},
  number = {4},
  pages = {339--349},
  year = {2022},
  month = {},
  publisher = {Korean Institute of Intelligent Systems},
}

2019

-

Multiple Object Tracking via Feature Pyramid Siamese Networks. Sangyun Lee and Euntai Kim. IEEE Access, 2019.

When multiple object tracking (MOT) based on the tracking-by-detection paradigm is implemented, the similarity metric between the current detections and existing tracks plays an essential role. Most MOT schemes based on a deep neural network learn the similarity metric using a Siamese architecture, but the plain Siamese architecture might not be enough owing to its structural simplicity and lack of motion information. This paper proposes a new MOT scheme to overcome these problems in conventional MOT methods. A feature pyramid Siamese network (FPSN) is proposed to address the structural simplicity. The FPSN is inspired by the feature pyramid network (FPN); it extends the Siamese network by applying FPN to the plain Siamese architecture and by developing a new multi-level discriminative feature. A spatiotemporal motion feature is added to the FPSN to overcome the lack of motion information and to enhance the performance in MOT. Thus, FPSN-MOT considers not only the appearance feature but also motion information. Finally, FPSN-MOT is applied to the public MOT challenge benchmark problems and its performance is compared to that of other state-of-the-art MOT methods.

@article{8587153,
  author = {Lee, Sangyun and Kim, Euntai},
  journal = {IEEE Access},
  title = {Multiple Object Tracking via Feature Pyramid Siamese Networks},
  year = {2019},
  volume = {7},
  number = {},
  pages = {8181-8194},
  keywords = {},
  doi = {10.1109/ACCESS.2018.2889442},
  issn = {2169-3536},
  month = {},
}

2017

-

Robust adaptive synchronization of a class of chaotic systems via fuzzy bilinear observer using projection operator. Sangyun Lee, Mignon Park, and Jaeho Baek. Information Sciences, 2017.

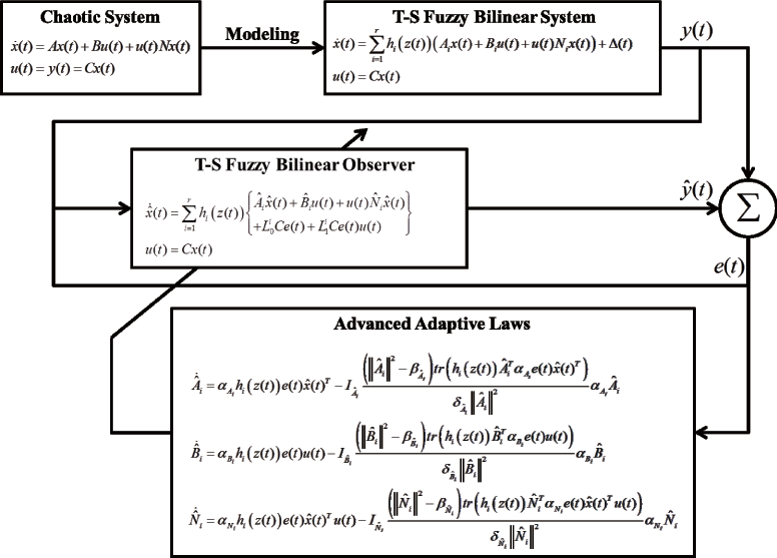

This study focuses on the problem of robust adaptive synchronization of uncertain bilinear chaotic systems. A Takagi–Sugeno fuzzy bilinear system (TSFBS) is employed herein to describe a bilinear chaotic system. A robust adaptive observer, which estimates the states of the TSFBS, is also developed. Advanced adaptive laws using a projection operator are designed to achieve both robustness against external disturbances and adaptation of unknown system parameters. A comparison with the existing observer shows that the proposed observer can achieve faster parameter adaptation and robust synchronization for an uncertain TSFBS with disturbances when the adaptive laws are utilized. The asymptotic stability and the robust performance of the error dynamics are guaranteed under some assumptions by Lyapunov stability theory. We verify the effectiveness of the proposed scheme in various aspects using examples of the generalized Lorenz system.

@article{LEE2017182,
  title = {Robust adaptive synchronization of a class of chaotic systems via fuzzy bilinear observer using projection operator},
  journal = {Information Sciences},
  volume = {402},
  pages = {182-198},
  year = {2017},
  issn = {0020-0255},
  doi = {https://doi.org/10.1016/j.ins.2017.03.004},
  url = {https://www.sciencedirect.com/science/article/pii/S0020025517305777},
  author = {Lee, Sangyun and Park, Mignon and Baek, Jaeho},
  keywords = {Chaos synchronization, Robust adaptive observer, Bilinear system, Takagi–Sugeno fuzzy system, Projection operator},
}